You’re not famous until they put your head on a pez dispenser. Or something. Image from pez.com; hopefully advertisement isn’t copyright infringement…

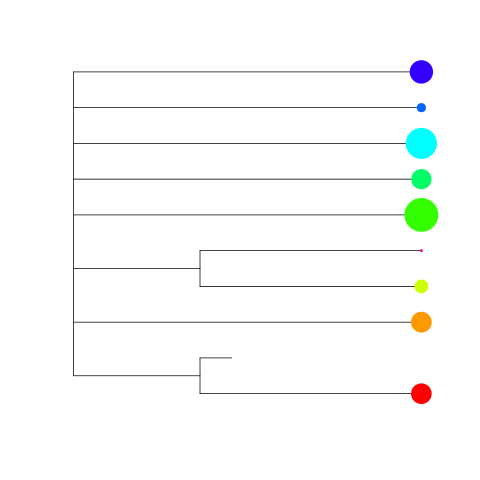

I have a new R package up on CRAN now (pez: Phylogenetics for the Environmental Sciences). We worked really hard to make sure the vignette was informative, but briefly, if you’re interested in:

- a combined data structure for phylogeny, community ecology, trait, and environmental data (comparative.comm)

- phylogenetic structure (e.g., shape, dispersion, and their traitgram options; read my review paper)

- simulating phylogenies and ecological assembly (e.g., scape, sim.meta, ConDivSim)

- building a phylogeny (phy.build)

- applying regression methods based on some work by Jeannine Cavender-Bares and colleagues (eco.xxx.regression, fingerprint.regression)

…there’s a function in there for you. For what it’s worth, I’m already using the package a lot myself, so there is at least one happy user already. I had a lot of fun working on this, mostly because all the co-authors are such lovely people. This includes the people who run CRAN – I was expecting a hazing for any kind of minor mistake, but they’re all lovely people!
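To give a flavour of the first item in that list, here is a minimal sketch of building a comparative.comm object. I’m assuming the `laja` example dataset that ships with the package, and the object names it loads (`invert.tree`, `river.sites`, `invert.traits`, `river.env`), so check the vignette if these don’t match your installed version.

```r
library(pez)

# Example data assumed to be bundled with pez: a phylogeny, a
# site-by-species community matrix, species traits, and site
# environmental data
data(laja)

# Bundle all four into one object, so that later subsetting keeps
# species and sites in sync across every component
cc <- comparative.comm(invert.tree, river.sites, invert.traits, river.env)

# Printing summarises what is inside
print(cc)
```

Everything else in the list above takes an object like `cc` as its starting point, which is the main reason to build one first.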

I learnt a few things while getting this ready to go, which might be of interest if, like me, you’re very naive as to how to do collaborative projects well. I don’t think much of this is R-specific, but here are the things whose importance surprised me…

- People are sometimes too nice.

    - So you have to needle them a bit to be constructively nasty. Some (I’m looking at you, Steve Walker) are so nice that they feel mean making important suggestions for improvements. Some feel things are lost in writing them down and prefer talking over Skype (e.g., me), others are quicker over email.

    - Everyone has different skills, and you have to use them. Some write lots of code, others write small but vital pieces, some check methods, others document them, and some will do all of that but be paranoid they’ve done nothing. Everyone wants to feel good about themselves, and if you don’t tell them what you want from them, they won’t be happy!

- Be consistent about methods.

    - I love using GitHub issues, but that meant that the 5% of the time I just did something without making an issue about it… someone else was doing the same thing at the same time. Be explicit!

    - If you’re going to use unit tests, make sure everyone knows what kind of tests to be writing (checking values of simulations? checking return types?…), and that they always run them before pushing code. Otherwise pain will ensue…

    - Whatever you do, make sure everyone has the same version of every dependency. I imagine at least one person has made some very, very loud noises about my having an older version of roxygen2 installed…

- Have a plan.

    - There will never be enough features, because there will never be an end to science. Start tagging things for ‘the next version’; you’ll be glad of it later.

    - Don’t be afraid to say no. Some things are ‘important’, but if no one cares enough to write the code and documentation for it, it will never get done. So just don’t do it!
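On the point about everyone having the same version of every dependency: one cheap way to catch mismatches is a tiny script that each collaborator runs before pushing. This is only a sketch in base R, not something we actually used for pez; the function name `check_versions` is mine, and the version numbers are placeholders rather than the package’s real requirements.

```r
# Hypothetical versions the team has agreed on (placeholder numbers)
agreed <- c(roxygen2 = "4.0.0", testthat = "0.9.0")

check_versions <- function(agreed) {
  # Look up each installed version without loading the package;
  # packageVersion() errors if a package is missing, so record NA instead
  installed <- vapply(names(agreed), function(pkg) {
    tryCatch(as.character(utils::packageVersion(pkg)),
             error = function(e) NA_character_)
  }, character(1))

  # One row per package; 'matches' is FALSE when missing or different
  data.frame(
    package   = names(agreed),
    agreed    = unname(agreed),
    installed = unname(installed),
    matches   = unname(!is.na(installed) & installed == agreed),
    stringsAsFactors = FALSE
  )
}

print(check_versions(agreed))
```

Any row where `matches` is `FALSE` is a conversation to have before the next push, not after.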